Test and secure your LLM apps

Scan your code for security risks, hallucination triggers, and performance issues

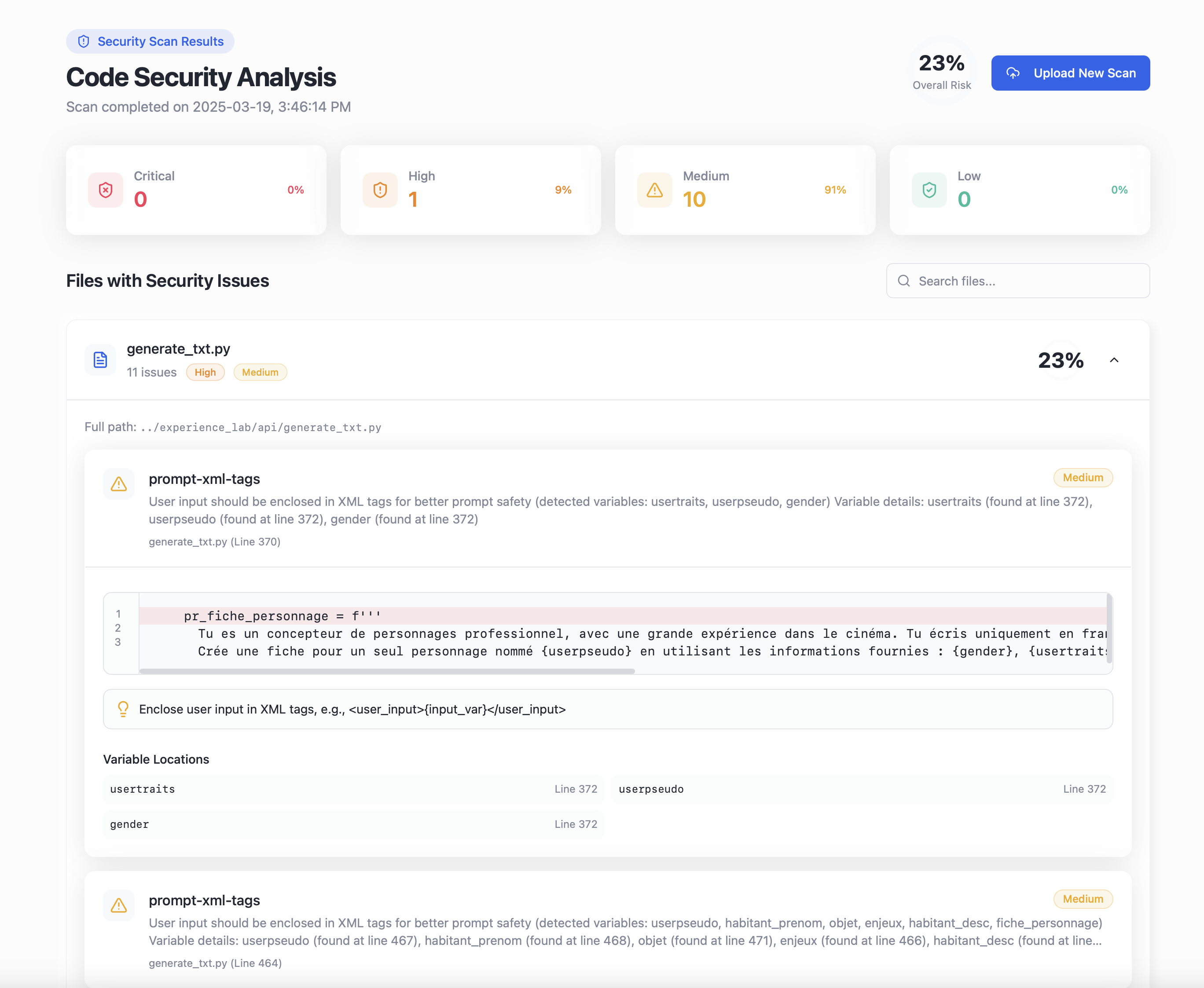

Identify LLM Code Risks

Our scanning technology analyzes your LLM applications to detect security vulnerabilities, performance bottlenecks, and potential output quality issues. Protect your AI systems from prompt injection attacks, inefficient token usage, and hallucinations with comprehensive scans.

Issue: chain-unsanitized-input (high)

Location: app/handlers.py:24

Message: Untrusted input 'query' flows to LLM API call without proper sanitization

Suggestion: Implement input validation or sanitization before passing untrusted input to LLM.

Security Risks

Prevent prompt injection attacks and unsafe execution vulnerabilities by identifying unsanitized user inputs.

Issue: prompt-long-list-attention (medium)

Location: utils/prompt_builder.py:52

Message: Potential attention issues: Long list 'items' is programmatically added to prompt. LLMs may struggle with attention over many items.

Suggestion: Consider chunking approach or using vector database for retrieval.

Performance Issues

Improve model performance and reduce token usage by identifying inefficient prompt structures.

Issue: output-structured-unconstrained-field (medium)

Location: models/schemas.py:28

Message: Field 'email' in model 'UserProfile' lacks constraints for type 'str'

Suggestion: Add validation using Field(pattern=r'[^@]+@[^@]+\.[^@]+', description="Valid email address")

Output Quality

Prevent hallucinations and ensure reliable model outputs with proper validation and constraints.

Advanced Detection Capabilities

Prompt XML Tag Safety

Detect improperly structured XML tags in prompts that could lead to confusion or security issues.

Subjective Term Detection

Identify subjective terms like "best" or "important" that need better definition to avoid biased outputs.

Inefficient Caching

Find opportunities to improve prompt caching for better performance and reduced API costs.

LangChain Vulnerabilities

Specialized analysis for LangChain code, including RAG vulnerabilities and unsafe input handling.

Customize Your Security Workflow

Custom Scanners

Create and integrate your own custom scanners to detect domain-specific issues unique to your application or company policies.

Comprehensive Logging

Track all scan activities with detailed logs that capture severity levels, timestamps, and remediation status for compliance and auditing.

Export JSON Reports

Generate detailed JSON reports for easy integration with CI/CD pipelines, dashboards, and other security management tools.

Pricing

- All code scanning features

- Console reporting

- Comprehensive reporting (JSON, logs)

- Community support via GitHub

- All Community features

- Jira issue tracking

- Custom scanner development

- Team dashboard & security metrics

- Email and priority support

- All Enterprise features

- Deploy within your own infrastructure

- Security policy enforcement

- SSO and access control

- Dedicated support with SLA

Ready to Secure Your AI Applications?

Start with your first scan!